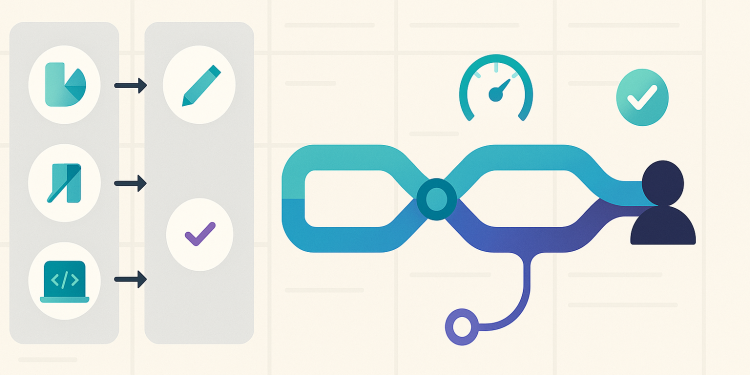

If you’ve ever watched a promising idea sink into a swamp of handoffs and status meetings, you know the problem isn’t talent—it’s topology. When work snakes across analysis → design → engineering → QA → security → legal → release, each hop adds delay, ambiguity, and rework. The fix isn’t shouting “do agile harder.” It’s reshaping the system so teams map to how value actually reaches customers.

You can see this principle in any short-feedback market. Operators in fast-moving digital niches (those adjacent to non-GamStop casino sites in the UK, for example) organise around the customer journey rather than job titles, because conversion, retention, and risk signals must move together. Different domain, same lesson: when your unit of organisation matches your unit of value, flow accelerates and quality improves.

What “Flow” Really Means

Flow is the smooth, continuous movement of ideas into customer impact with minimal waiting, rework, and handoffs. It thrives when teams own outcomes end-to-end, shorten feedback loops, and have the skills to ship safely without external queues.

Why flow beats “throughput theater”:

- Fewer handoffs → fewer queues and less rework

- Shorter loops → faster judgment and better bets

- Clear ownership → issues stop bouncing around
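One way to put a number on the cost of handoffs is flow efficiency: the share of total lead time during which someone is actively working on an item. The Python sketch below is illustrative only. The `Stage` type, the stage names, and every hour value are invented assumptions, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    active_hours: float   # time someone is actively working on the item
    waiting_hours: float  # time the item sits in a queue at this stage


def flow_efficiency(stages: list[Stage]) -> float:
    """Share of total lead time spent on active work, from 0.0 to 1.0."""
    active = sum(s.active_hours for s in stages)
    lead_time = active + sum(s.waiting_hours for s in stages)
    return active / lead_time if lead_time else 0.0


# Illustrative journey with one handoff per function (numbers are invented).
journey = [
    Stage("analysis", 4, 16),
    Stage("design", 6, 24),
    Stage("engineering", 20, 8),
    Stage("qa", 6, 40),
    Stage("security", 2, 48),
    Stage("release", 2, 24),
]

print(f"Flow efficiency: {flow_efficiency(journey):.0%}")  # prints "Flow efficiency: 20%"
```

In this made-up journey the item spends 40 of 200 hours in active work, so removing a single queue (the 48-hour security wait, say) shortens lead time far more than speeding up any one stage would.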

Step 1: Map Value Streams (Not the Org Chart)

Start with an hour-long mapping session:

- Name the outcome, e.g., “Reduce time-to-first-value by 20%.”

- Sketch the journey. Awareness → signup → first value → habit → expansion.

- Overlay real work. Research, UX, FE/BE, data, QA, compliance, release, support.

- Circle delays. Approvals, environment setup, data access, sign-offs.

- Propose streams. Typically 3–7, such as Acquisition, Onboarding, Payments, Core Experience, and Risk & Trust.

Each stream should be the smallest unit of customer value that a single team can deliver end-to-end.
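Circling delays is easier to act on when the observations are tallied by cause and by journey stage. A minimal Python sketch, assuming each delay spotted during the session is logged as a hypothetical `Delay` record; the stages, causes, and hours are placeholders.

```python
from collections import Counter
from typing import NamedTuple


class Delay(NamedTuple):
    journey_stage: str  # e.g. "signup", "first value"
    cause: str          # e.g. "approval", "data access"
    hours: float        # observed waiting time


def total_delay_by(delays: list[Delay], field: str) -> list[tuple[str, float]]:
    """Sum waiting hours per value of `field`, largest total first."""
    totals: Counter = Counter()
    for d in delays:
        totals[getattr(d, field)] += d.hours
    return totals.most_common()


# Invented observations from a mapping session.
delays = [
    Delay("signup", "approval", 24),
    Delay("signup", "environment setup", 8),
    Delay("first value", "data access", 36),
    Delay("first value", "approval", 16),
    Delay("expansion", "sign-off", 12),
]

print(total_delay_by(delays, "cause"))
print(total_delay_by(delays, "journey_stage"))
```

With these placeholder numbers, approvals top the cause list and the first-value stage carries the most waiting, which would point an Onboarding stream at those queues first.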

Step 2: Stand Up Long-Lived, Cross-Functional Teams

A value-stream team should have:

- Mandate: One measurable outcome (e.g., time-to-first-value < 3 minutes).

- Scope: Clear surfaces and decision rights.

- People: Product, design, FE/BE engineering, data, QA; security/compliance embedded or available on a reliable cadence.

- Cadence: Weekly outcome reviews; monthly strategy updates; quarterly “kill/scale” portfolio decisions.

- Backlog: Delivery and discovery (prototypes, interviews, data dives) with WIP limits.

Leader’s job: Be tight on purpose, loose on method—teams choose the how.
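WIP limits are simple to enforce mechanically: a team pulls new work only when a track has a free slot. A sketch in Python, assuming a hypothetical `TeamBoard` with separate limits for discovery and delivery work; the limits and item names are illustrative, and most teams would enforce this in their tracking tool rather than in code.

```python
from dataclasses import dataclass, field


@dataclass
class TeamBoard:
    """Hypothetical team board with a separate WIP limit per track."""
    wip_limits: dict[str, int]  # e.g. {"discovery": 1, "delivery": 2}
    in_progress: dict[str, list[str]] = field(default_factory=dict)

    def pull(self, track: str, item: str) -> bool:
        """Start `item` only if its track is under the WIP limit."""
        active = self.in_progress.setdefault(track, [])
        if len(active) >= self.wip_limits.get(track, 0):
            return False  # finish something before starting something new
        active.append(item)
        return True

    def finish(self, track: str, item: str) -> None:
        """Mark `item` done, freeing a slot on its track."""
        self.in_progress[track].remove(item)


board = TeamBoard({"discovery": 1, "delivery": 2})
print(board.pull("delivery", "signup form v2"))          # True
print(board.pull("delivery", "email verification"))      # True
print(board.pull("delivery", "social login"))            # False: limit reached
print(board.pull("discovery", "onboarding interviews"))  # True
board.finish("delivery", "signup form v2")
print(board.pull("delivery", "social login"))            # True: a slot freed up
```

An unknown track gets a limit of zero, so nothing starts on work the team charter has not scoped.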

Step 3: Replace Handoffs with Interfaces

Where streams meet, make boundaries explicit and automated:

- APIs with contracts & SLOs (plus synthetic tests that fail loud).

- Shared design tokens/components to keep UX consistent without committees.

- Data contracts with schemas, lineage, and access rules—no mystery CSVs.

- Runbooks with DRIs and “what to do when X breaks.”
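A data contract can be checked automatically at the boundary, so a broken feed fails loudly instead of turning up later as a mystery CSV. This Python sketch covers only the schema part (lineage and access rules are out of scope), and `DataContract`, `check_batch`, the `signups` dataset, and its owner are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class DataContract:
    """Hypothetical contract a producing stream publishes for one dataset."""
    name: str
    owner: str               # DRI for the dataset
    schema: dict[str, type]  # required field -> expected Python type

    def violations(self, record: dict) -> list[str]:
        """List every way `record` breaks the schema (empty if it conforms)."""
        problems = []
        for field_name, expected in self.schema.items():
            if field_name not in record:
                problems.append(f"missing field '{field_name}'")
            elif not isinstance(record[field_name], expected):
                actual = type(record[field_name]).__name__
                problems.append(f"'{field_name}' should be {expected.__name__}, got {actual}")
        return problems


def check_batch(contract: DataContract, records: list[dict]) -> None:
    """Fail loudly on the first record that breaks the contract."""
    for i, record in enumerate(records):
        problems = contract.violations(record)
        if problems:
            raise ValueError(
                f"{contract.name} (owner: {contract.owner}), record {i}: " + "; ".join(problems)
            )


signups = DataContract(
    name="signups",
    owner="onboarding-team",
    schema={"user_id": str, "signed_up_at": str, "plan": str},
)

# A conforming batch passes silently.
check_batch(signups, [{"user_id": "u1", "signed_up_at": "2024-05-01T10:00:00Z", "plan": "free"}])

try:
    check_batch(signups, [{"user_id": 42, "plan": "free"}])
except ValueError as err:
    print(err)
```

In production a schema library or registry usually does this job; the plain-Python version only shows the shape of the check and the fact that the error names the owning DRI.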

Step 4: Make Learning Loops Deliberate

Flow without learning is just faster guessing.

- Dual-track work: Discovery items flow alongside delivery with their own “done” (evidence).

- Pre-declared success criteria: Every bet has leading indicators agreed up front.

- Instrumentation everywhere: Dashboards update automatically; decisions follow data.

- Rituals that matter: Retros with actions, demos that drive decisions, blameless postmortems that change the system.
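Pre-declared success criteria can be written down as data before the work starts, so the verdict is mechanical rather than negotiated afterwards. A minimal Python sketch, assuming hypothetical `Bet` and `Criterion` types and invented metric names and thresholds (for example, a time-to-first-value target of 3 minutes):

```python
import operator
from dataclasses import dataclass

# Comparison operators a criterion may use.
_OPS = {">=": operator.ge, "<=": operator.le}


@dataclass
class Criterion:
    metric: str
    op: str           # ">=" or "<="
    threshold: float


@dataclass
class Bet:
    """Hypothetical bet whose success criteria are fixed before work starts."""
    name: str
    criteria: list[Criterion]

    def evaluate(self, observed: dict[str, float]) -> str:
        """Return "met", "not met", or "inconclusive" (a metric was never measured)."""
        if any(c.metric not in observed for c in self.criteria):
            return "inconclusive"
        if all(_OPS[c.op](observed[c.metric], c.threshold) for c in self.criteria):
            return "met"
        return "not met"


bet = Bet(
    "guided setup checklist",
    [Criterion("activation_rate", ">=", 0.35), Criterion("median_ttfv_minutes", "<=", 3)],
)
print(bet.evaluate({"activation_rate": 0.38, "median_ttfv_minutes": 2.5}))  # met
print(bet.evaluate({"activation_rate": 0.30, "median_ttfv_minutes": 2.5}))  # not met
print(bet.evaluate({"activation_rate": 0.38}))                              # inconclusive
```

Returning "inconclusive" when a metric is missing makes absent instrumentation visible instead of letting it count as a pass.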

Step 5: Fund Teams, Not Projects

Keep teams stable and flex what they do:

- H1 (0–3 months): Committed, tightly scoped bets with metrics.

- H2 (3–9 months): Options with stage gates—scale or kill by signal.

- H3 (9–18 months): Themes only; avoid false precision.

Quarterly, reallocate capacity based on evidence, not sunk cost.
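The quarterly reallocation can be expressed as a small, explicit rule over review signals. This Python sketch assumes capacity is tracked as points (for instance, percent of team time), that each bet receives a "scale", "kill", or "hold" signal, and that freed capacity is split evenly across scaled bets; all three are illustrative assumptions, not a prescribed policy.

```python
def reallocate(allocations: dict[str, int], signals: dict[str, str]) -> dict[str, float]:
    """Move capacity from killed bets to scaled ones; held bets keep their share.

    allocations: bet -> capacity points (e.g. percent of the team's time)
    signals: bet -> "scale", "kill" or "hold" from the quarterly review;
             a bet with no signal is treated as "hold".
    """
    freed = sum(pts for bet, pts in allocations.items() if signals.get(bet) == "kill")
    scaled = [bet for bet in allocations if signals.get(bet) == "scale"]
    result = {bet: float(pts) for bet, pts in allocations.items() if signals.get(bet) != "kill"}
    if scaled:
        # Assumption: freed capacity is split evenly across scaled bets.
        for bet in scaled:
            result[bet] += freed / len(scaled)
    elif freed:
        # Nothing earned more capacity: park it for new H1/H2 bets.
        result["unassigned"] = float(freed)
    return result


current = {"onboarding checklist": 40, "referral program": 30, "dark mode": 30}
review = {"onboarding checklist": "scale", "referral program": "hold", "dark mode": "kill"}
print(reallocate(current, review))
# {'onboarding checklist': 70.0, 'referral program': 30.0}
```

Treating a missing signal as "hold" means forgetting to review a bet never silently kills it, while total capacity is always conserved.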

Metrics That Make Flow Visible

Track both outcomes and health (flow/quality):

Outcome (customer/business)

- Activation rate; time-to-first-value

- Retention (D7/D30); CSAT/NPS

- Revenue per user; cost to serve

Flow & Quality (engineering health)

- Lead time for change

- Deployment frequency

- Change failure rate; defect escape rate

- MTTR (mean time to restore); incident count by severity

- WIP; queue time per stage

Each team owns a one-page scorecard with targets, trends, and the next three interventions.
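Several of the flow and quality metrics can be derived from deployment records alone. The Python sketch below assumes a hypothetical `Deployment` record per production change and approximates time to restore from the deploy that caused the failure; real incident timestamps would be more accurate. The dates and durations are invented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

HOUR = timedelta(hours=1)


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # change reached production
    failed: bool = False                    # needed a rollback, hotfix or patch
    restored_at: Optional[datetime] = None  # service restored, if it failed


def flow_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute four flow/quality numbers for one team over one period."""
    if not deploys:
        raise ValueError("no deployments in period")
    lead_times = [(d.deployed_at - d.committed_at) / HOUR for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Assumption: an incident starts at deploy time (real incident data is better).
    restore_times = [(d.restored_at - d.deployed_at) / HOUR for d in failures if d.restored_at]
    return {
        "median_lead_time_hours": median(lead_times),
        "deploys_per_day": len(deploys) / period_days,
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore_hours": (
            sum(restore_times) / len(restore_times) if restore_times else 0.0
        ),
    }


t0 = datetime(2024, 5, 6, 9, 0)
week = [
    Deployment(t0, t0 + 4 * HOUR),
    Deployment(t0 + 24 * HOUR, t0 + 26 * HOUR, failed=True, restored_at=t0 + 27 * HOUR),
    Deployment(t0 + 48 * HOUR, t0 + 54 * HOUR),
    Deployment(t0 + 72 * HOUR, t0 + 75 * HOUR),
]
print(flow_metrics(week, period_days=7))
```

For this made-up week the median lead time is 3.5 hours and one of four deploys failed, a change failure rate of 0.25.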

Anti-Patterns to Retire (and What to Do Instead)

- Central QA as final gate → Shift-left testing, contract tests, prod-like staging owned by the team.

- Weekly approval boards → Policy-as-code and asynchronous written decisions with SLAs.

- Hero culture → Resilient systems, clear DRIs, and runbooks beat late-night heroics.

- Metrics theater → Tie every metric to a decision rule (“If X moves, we do Y”); drop the rest.
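Replacing an approval board with policy-as-code means writing the board's rules as checks that run on every change. A plain-Python sketch, assuming a hypothetical `Change` record that a CI pipeline could populate; the policies themselves are examples, and dedicated engines such as Open Policy Agent are a common choice in practice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Change:
    """Hypothetical facts a CI pipeline could collect about one change."""
    tests_passed: bool
    contract_tests_passed: bool
    critical_vulns: int
    touches_payments: bool
    second_reviewer: bool


# Each policy: a readable name plus a predicate that must hold to ship.
POLICIES: list[tuple[str, Callable[[Change], bool]]] = [
    ("all tests pass", lambda c: c.tests_passed),
    ("contract tests pass", lambda c: c.contract_tests_passed),
    ("no critical vulnerabilities", lambda c: c.critical_vulns == 0),
    ("payments changes need a second reviewer",
     lambda c: not c.touches_payments or c.second_reviewer),
]


def violations(change: Change) -> list[str]:
    """Names of the policies this change breaks; empty means it may ship."""
    return [name for name, rule in POLICIES if not rule(change)]


change = Change(tests_passed=True, contract_tests_passed=True,
                critical_vulns=0, touches_payments=True, second_reviewer=False)
print(violations(change))  # ['payments changes need a second reviewer']
```

Each violation names the rule that blocked the change, which gives the team the same answer a board meeting would, without waiting a week for it.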

A Lightweight 90-Day Plan

Days 0–30: Map streams. Write team charters. Publish a service catalog (design system, CI/CD, observability, data platform). Simplify interfaces.

Days 31–60: Staff stable teams (no fractional allocations). Launch dual-track flow. Add guardrails (secure defaults, quality gates, paved release paths).

Days 61–90: Run 3–5 measurable bets per team. Review scorecards weekly. Kill/scale based on signal. Share learnings org-wide.

Closing Thought

Silos optimise for local efficiency. Value streams optimise for customer impact. If you want fewer meetings, calmer releases, and faster value, design for flow: map the journey, create stable cross-functional teams with real decision rights, automate your interfaces, and let disciplined learning loops steer the roadmap. Do that, and “agile” stops being a ceremony—and starts being how your organisation naturally works.